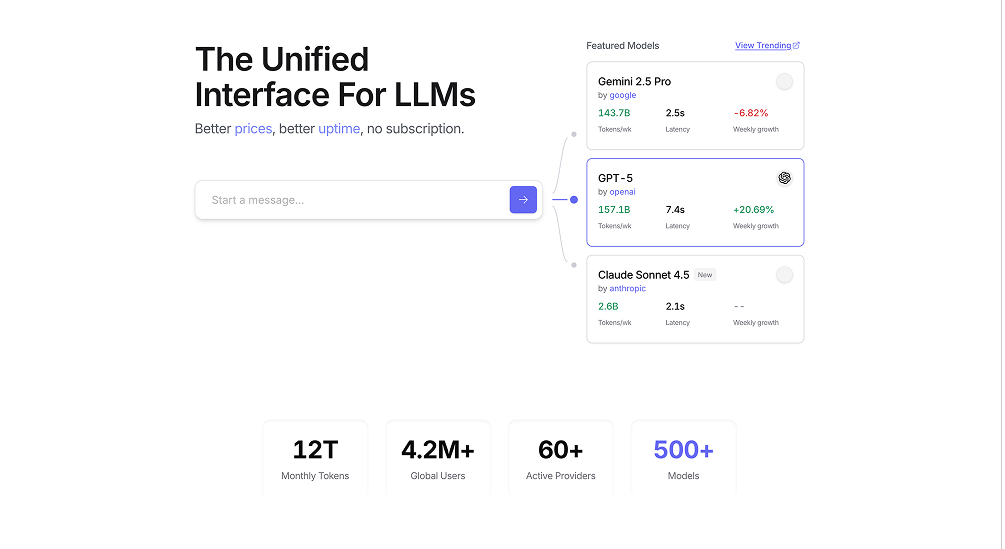

Stop building your AI application on a single point of failure. OpenRouter is the universal API layer for large language models, providing access to over 100 of the world’s best AI models—including GPT-4o, Claude 3 Opus, and Llama 3—through a single, OpenAI-compatible endpoint. Developers who build on OpenRouter can reduce their AI costs by 30-50% and achieve near-perfect uptime by eliminating vendor lock-in and leveraging intelligent, real-time model routing.

Why OpenRouter is the Strategic Choice for AI Developers

Universal Access, Zero Refactoring: With OpenRouter, you can switch from GPT-4 to Claude 3 with a single parameter change, not a week of refactoring. This massive selection of models, all accessible through a unified API, allows you to use the best tool for every job—the most powerful model for complex reasoning, the fastest for conversational AI, and the cheapest for simple tasks. This flexibility is a profound strategic advantage.
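Because the endpoint is OpenAI-compatible, switching models comes down to the `model` string in the request body. Below is a minimal standard-library sketch that builds (but does not send) the HTTP request. The endpoint URL follows OpenRouter's docs. The function name and the placeholder key are illustrative, and the model IDs should be checked against OpenRouter's current model list.

```python
import json
import urllib.request

# OpenRouter's OpenAI-compatible chat completions endpoint.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for any OpenRouter model ID."""
    body = {
        "model": model,  # the only field that changes when you switch models
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Same prompt, two providers: only the model string differs.
# "YOUR_OPENROUTER_KEY" is a placeholder, not a real credential.
gpt_req = build_chat_request("openai/gpt-4o", "Summarize this ticket.", "YOUR_OPENROUTER_KEY")
claude_req = build_chat_request("anthropic/claude-3-opus", "Summarize this ticket.", "YOUR_OPENROUTER_KEY")
```

Sending either request is a matter of `urllib.request.urlopen(...)`. The response is the familiar OpenAI-style JSON with a `choices` array.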

Engineered for Unbreakable Reliability: When a single provider like OpenAI has an outage, applications built directly on their API go down. Applications built on OpenRouter can keep running. OpenRouter routes around a failing provider that hosts the same model. With model fallbacks configured, it also retries a failed request with the next model in your fallback list, so your service stays online. This is how you build production-grade AI applications that your users can depend on.
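Fallbacks are declared per request. The sketch below shows a minimal JSON body, assuming OpenRouter's `models` routing parameter. Under that parameter the listed models are tried in order, and the `model` field of the response reports which one actually served the request. The helper name and the model IDs are illustrative.

```python
import json


def with_fallbacks(primary: str, fallbacks: list[str], messages: list[dict]) -> dict:
    """Chat payload asking OpenRouter to try `fallbacks`, in order, if `primary` fails."""
    return {
        # Assumption: OpenRouter's `models` routing parameter. The first entry is
        # the preferred model; later entries are used only if earlier ones error.
        "models": [primary, *fallbacks],
        "messages": messages,
    }


payload = with_fallbacks(
    "openai/gpt-4o",
    ["anthropic/claude-3-opus", "meta-llama/llama-3-70b-instruct"],
    [{"role": "user", "content": "Draft a short status update."}],
)
print(json.dumps(payload, indent=2))
```

The payload is POSTed to the same chat completions endpoint as any other request. No separate fallback service is involved.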

Radical Cost Optimization: Don’t pay for a sledgehammer when you need a fly swatter. OpenRouter’s intelligent routing allows you to automatically direct requests to the most cost-effective model that can successfully complete the task. By routing simple classification tasks to cheap, open-source models and reserving expensive, frontier models for complex reasoning, teams can slash their AI operational costs by 30-50% without sacrificing quality.
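The saving from tiered routing is simple arithmetic. Suppose a share p of requests goes to a model costing c_cheap and the rest goes to one costing c_frontier. The bill then falls by p(1 - c_cheap/c_frontier) compared with sending everything to the frontier model. The sketch below assumes requests are similar in size, and the prices are hypothetical placeholders, not current list prices.

```python
def blended_price(cheap_share: float, cheap_price: float, frontier_price: float) -> float:
    """Average price per request when `cheap_share` of traffic goes to the cheap model."""
    if not 0.0 <= cheap_share <= 1.0:
        raise ValueError("cheap_share must be between 0 and 1")
    return cheap_share * cheap_price + (1.0 - cheap_share) * frontier_price


def savings_vs_frontier_only(cheap_share: float, cheap_price: float, frontier_price: float) -> float:
    """Fractional saving versus sending every request to the frontier model."""
    return 1.0 - blended_price(cheap_share, cheap_price, frontier_price) / frontier_price


# Hypothetical per-request prices in USD, chosen only to illustrate a 30x gap.
CHEAP, FRONTIER = 0.001, 0.03
for share in (0.25, 0.5, 0.75):
    saved = savings_vs_frontier_only(share, CHEAP, FRONTIER)
    print(f"{share:.0%} of traffic on the cheap model -> {saved:.1%} lower bill")
```

With a price gap this wide, the saving is close to the share of traffic you can route cheaply. Under these assumptions, a 30-50% reduction corresponds to sending roughly a third to a half of requests to the cheap tier.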

The Metrics That Define a Smarter AI Stack

- Model Flexibility: Access 100+ models from a dozen providers through one integration.

- Uptime: Achieve >99.9% effective uptime with automatic provider fallbacks.

- Development Velocity: Switch between any two models in under 5 minutes.

- Cost Reduction: Lower your monthly AI spend by 30-50% through intelligent, real-time routing.
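The uptime figure rests on simple probability: with fallbacks, a request fails only if every option in the chain fails. The sketch below is a back-of-the-envelope calculation that assumes independent outages and ignores retry latency. The routing layer is itself an extra dependency, which the optional `router_availability` factor models.

```python
def effective_availability(provider_availabilities, router_availability=1.0):
    """Probability a request is served when each model in the chain is tried in turn.

    Assumes outages are independent, which is optimistic: correlated failures
    (shared cloud regions, load spikes during another provider's outage) lower
    the real figure. `router_availability` models the routing layer itself.
    """
    all_down = 1.0
    for a in provider_availabilities:
        if not 0.0 <= a <= 1.0:
            raise ValueError("availabilities must be between 0 and 1")
        all_down *= 1.0 - a  # the request fails only if every option fails
    return router_availability * (1.0 - all_down)


for chain in ([0.99], [0.99, 0.99], [0.99, 0.99, 0.995]):
    print(chain, f"{effective_availability(chain):.5%}")
```

Two independent 99%-available models give 1 - 0.01 × 0.01 = 99.99% on paper. In practice, the routing layer's own availability and correlated outages pull that number down.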

Who Executes with OpenRouter

The Ideal Customer Profile:

- AI-native startups building products where model choice is a competitive advantage.

- Established companies looking to add AI features without getting locked into a single vendor.

- Developers and teams that prioritize application resilience, cost-efficiency, and future-proofing.

- Anyone who has experienced the pain of a provider-specific API outage.

The Decision-Makers:

- CTOs and Heads of Engineering who are designing a resilient and scalable AI architecture.

- AI and Machine Learning Engineers who need to experiment with and deploy multiple models.

- Product Managers who need to balance performance, cost, and reliability for their AI features.

- Founders who understand that strategic technical decisions made today will have a massive impact tomorrow.

Common Use Cases That Drive ROI

Building a Provider-Agnostic Application: A startup builds an AI-powered writing assistant. They use OpenRouter to allow their users to choose between GPT-4o for maximum creativity or Claude 3 Haiku for speed and cost-effectiveness. This flexibility becomes a key product differentiator.

High-Availability AI Service: A company’s core product relies on an AI-powered API. They use OpenRouter’s fallback feature to ensure that if their primary model (e.g., from OpenAI) goes down, requests are automatically rerouted to a backup (e.g., from Anthropic or Google). As a result, an outage at one provider does not become an outage for their customers.

Intelligent Cost-Saving Router: A developer builds a chatbot that handles a high volume of requests. They configure OpenRouter’s “auto” router to send simple, conversational queries to a fast, cheap model like Llama 3 and only escalate complex, analytical queries to an expensive model like Claude 3 Opus. This strategy cuts their monthly bill in half.
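The same escalation policy can also be expressed explicitly on the client side, which makes each routing decision easy to audit. The sketch below is minimal and is not OpenRouter's auto router. The keyword pattern and word-count threshold are hypothetical heuristics, and the model IDs are examples. The returned ID would go into the `model` field of an ordinary chat request.

```python
import re

# Hypothetical tier table; swap in whichever OpenRouter model IDs you actually use.
FAST_MODEL = "meta-llama/llama-3-8b-instruct"
STRONG_MODEL = "anthropic/claude-3-opus"

# Crude signals that a query needs multi-step reasoning (illustrative only).
ESCALATION_PATTERN = re.compile(
    r"\b(analy[sz]e|compare|prove|derive|step[- ]by[- ]step|trade-?offs?)\b",
    re.IGNORECASE,
)


def pick_model(query: str, max_fast_words: int = 60) -> str:
    """Return the cheap model for short conversational queries, the frontier model otherwise."""
    if len(query.split()) > max_fast_words or ESCALATION_PATTERN.search(query):
        return STRONG_MODEL
    return FAST_MODEL


print(pick_model("hey, what are your opening hours?"))
print(pick_model("Compare these two vendor contracts and list the trade-offs."))
```

A keyword heuristic will misroute some queries. The advantage of keeping the policy in your own code is that you can log every decision and tune the threshold against real traffic.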

Critical Success Factors

The Pricing Reality Check:

- No Subscription Fees: You only pay for what you use. The platform is free.

- Pay-per-use: You pre-purchase credits, which are drawn down at each model's listed per-token rates.

- Credit Purchase Fee: There is a 5.5% fee for card payments and a 5% fee for crypto.

- The ROI: Routing only has to cut inference spend by about 5% to cover the credit purchase fee. Beyond that point, the savings from intelligent routing and the value of avoided downtime usually outweigh the platform fees.
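The break-even point for the credit fee can be worked out directly. This sketch makes three assumptions: per-token prices match what you would pay the provider directly, the fee is charged on top of the credits purchased, and any minimum fee is ignored. Under those assumptions, routing must save a fraction s satisfying (1 - s)(1 + f) = 1, i.e. s = f / (1 + f). The function names are illustrative.

```python
def break_even_savings(fee_rate: float) -> float:
    """Smallest routing saving that offsets a credit-purchase fee.

    Assumption: the fee is added on top of the credits bought, so each dollar
    of inference costs (1 + fee_rate) dollars. Any minimum fee is ignored.
    """
    return fee_rate / (1.0 + fee_rate)


def net_cost(direct_spend: float, routing_savings: float, fee_rate: float) -> float:
    """Cost of the same workload bought through credits, after routing savings and fees."""
    return direct_spend * (1.0 - routing_savings) * (1.0 + fee_rate)


print(f"card:   {break_even_savings(0.055):.2%}")
print(f"crypto: {break_even_savings(0.05):.2%}")
print(f"$10,000/month at 30% savings: ${net_cost(10_000, 0.30, 0.055):,.2f}")
```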

Implementation Requirements:

- Trivial for OpenAI Users: If you’re already using the OpenAI SDK, migrating to OpenRouter takes less than 5 minutes. You change the `base_url` to point to OpenRouter’s endpoint, swap in an OpenRouter API key, and use OpenRouter’s provider-prefixed model IDs (e.g., `openai/gpt-4o`).

- Strategic Model Selection: The real work is in understanding the strengths and weaknesses of different models and designing a routing strategy that aligns with your product goals.
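For teams already on the OpenAI Python SDK, the migration amounts to a change in the client's constructor arguments. This minimal sketch assumes the `openai` package (v1 or later) and an OpenRouter key stored in an `OPENROUTER_API_KEY` environment variable. `make_client` and `ask` are illustrative names.

```python
import os

# OpenRouter's OpenAI-compatible base URL; the SDK appends paths like /chat/completions.
OPENROUTER_BASE_URL = "https://openrouter.ai/api/v1"


def openrouter_client_kwargs(api_key: str) -> dict:
    """Constructor arguments that point the OpenAI Python SDK at OpenRouter."""
    return {"base_url": OPENROUTER_BASE_URL, "api_key": api_key}


def make_client():
    """Create an OpenAI SDK client that talks to OpenRouter instead of OpenAI."""
    from openai import OpenAI  # imported lazily so this module loads without the SDK

    # Assumes your OpenRouter key is stored in OPENROUTER_API_KEY.
    return OpenAI(**openrouter_client_kwargs(os.environ["OPENROUTER_API_KEY"]))


def ask(client, model: str, prompt: str) -> str:
    """Unchanged application code: a standard chat.completions call."""
    response = client.chat.completions.create(
        model=model,  # OpenRouter IDs carry a provider prefix, e.g. "openai/gpt-4o"
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content
```

Calling `ask(make_client(), "anthropic/claude-3-opus", "Hello")` then reaches Claude through the same code path that previously served GPT models.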

The Integration Ecosystem

A Universal Translator for AI: OpenRouter is designed to be the central hub for all your AI interactions.

- Model Providers: Seamless access to models from OpenAI, Anthropic, Google, Meta, Mistral, Cohere, and dozens of open-source providers.

- Developer Frameworks: Works out-of-the-box with any OpenAI-compatible SDK, as well as popular frameworks like LangChain and LlamaIndex.

The Bottom Line

Choosing an AI provider is one of the most critical decisions a developer can make. Building your application directly on a single, proprietary API is a strategic error that introduces fragility, vendor lock-in, and massive future switching costs.

OpenRouter is the definitive solution. It is a tactical layer that sits between your application and the entire AI ecosystem. It gives you the power to choose the best model for any task, keeps your service available when any single provider fails, and optimizes your costs in real time. For any developer or company that is serious about building a professional, production-grade AI application, it is the only logical choice.