Google Maps Lead Generation: The Automated Prospecting Engine

An automated lead generation system that extracts business data from Google Maps and validates email addresses using a cost-optimized, multi-service pipeline.
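The write-up does not spell out how the "cost-optimized, multi-service" validation works. Below is a minimal sketch of one common way to build it. It assumes that cost optimization means running free checks first and only paying for an external verification service when the cheaper checks are inconclusive. The services `service_a` and `service_b`, their per-call costs, and the `Validator` type are all hypothetical stand-ins, not the project's actual providers.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# A verdict of None means "inconclusive: ask the next, more expensive service".
Verdict = Optional[bool]

# Free local check that only rejects obviously malformed addresses.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


@dataclass
class Validator:
    name: str
    cost_per_check: float  # assumed price per call, in arbitrary units
    check: Callable[[str], Verdict]


def syntax_check(email: str) -> Verdict:
    # Malformed means definitely invalid; well-formed is still unverified.
    return None if EMAIL_RE.match(email) else False


def validate(email: str, validators: List[Validator]) -> Tuple[Verdict, float, List[str]]:
    """Try validators from cheapest to most expensive; stop at the first definitive verdict."""
    spent = 0.0
    used: List[str] = []
    for v in sorted(validators, key=lambda val: val.cost_per_check):
        spent += v.cost_per_check
        used.append(v.name)
        verdict = v.check(email)
        if verdict is not None:
            return verdict, spent, used
    return None, spent, used  # every service was inconclusive


# Hypothetical stand-ins for paid third-party verification APIs.
def service_a(email: str) -> Verdict:
    return None if email.endswith("@example.com") else True


def service_b(email: str) -> Verdict:
    return False


VALIDATORS = [
    Validator("service_b", 0.01, service_b),
    Validator("syntax", 0.0, syntax_check),
    Validator("service_a", 0.002, service_a),
]
```

With these stubs, `validate("info@acme.fi", VALIDATORS)` returns `True` after calling only `syntax` and `service_a`, so the pricier `service_b` is never billed. A malformed address is rejected by the free syntax check alone.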

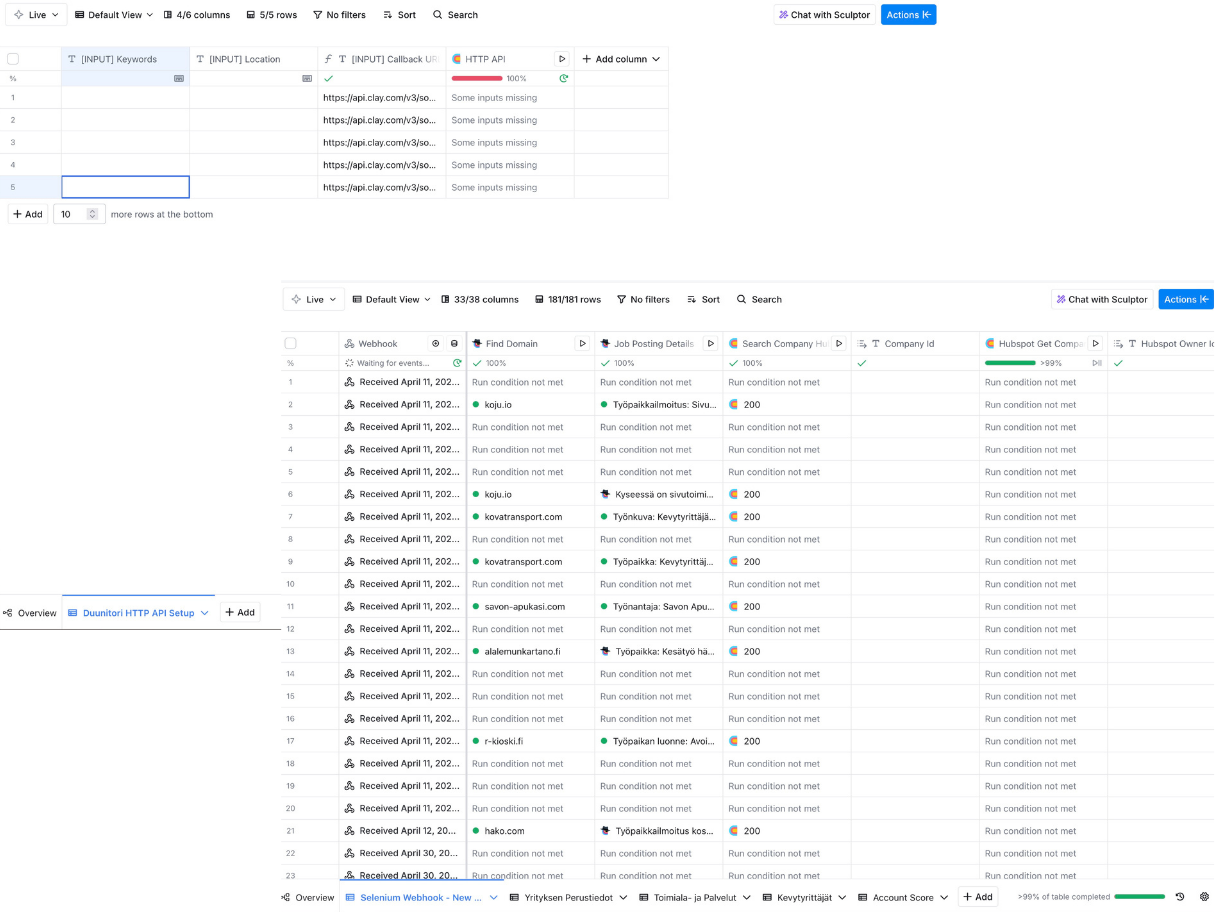

A scalable, event-driven web scraping service built on Google Cloud Platform that automatically collects job listings from Finland's largest job portal, featuring a microservices architecture and real-time data processing.

You need to extract data from the web. It seems simple at first. You write a script. It works.

Then you try to scale it.

Your script gets blocked. The website’s structure changes, and your parser breaks. You need to run multiple jobs at once, and your single server crashes. You’ve spent more time debugging and firefighting than you have collecting actual data.
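A common first defense against getting blocked is to back off and retry instead of hammering the site. The sketch below shows capped exponential backoff with "full jitter," meaning each wait is a random value between zero and the current cap. It is an illustration under assumptions, not the project's code: `BlockedError` is a hypothetical exception that a fetcher would raise on responses such as HTTP 403 or 429, and the default delays are placeholder values.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class BlockedError(Exception):
    """Hypothetical error a fetcher raises when the target blocks it (e.g. HTTP 403 or 429)."""


def with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> T:
    """Call fn, retrying on BlockedError with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    rng = rng if rng is not None else random.Random()
    for attempt in range(max_attempts):
        try:
            return fn()
        except BlockedError:
            if attempt == max_attempts - 1:
                raise  # out of retries: let the caller (or the job queue) handle it
            # Wait a random time in [0, cap] so parallel workers don't retry in lockstep.
            cap = min(max_delay, base_delay * (2 ** attempt))
            sleep(rng.uniform(0, cap))
    raise AssertionError("unreachable")
```

Injecting `sleep` and `rng` keeps the helper testable. For example, passing `sleep=delays.append` records the waits instead of actually pausing.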

The real problem isn’t just writing a scraper. It’s building a resilient, scalable, production-grade platform that can handle the hostile, ever-changing environment of the modern web.

You don’t need a fragile script. You need an industrial-strength system.

This project delivers that system: a cloud-native, event-driven web scraping platform built on a microservices architecture. It’s designed from the ground up to be scalable, resilient, and fully automated, turning an inherently brittle activity into a reliable, production-grade data pipeline.

Here’s the framework that makes it production-ready.

This isn’t a single, monolithic application. It’s a decoupled assembly line where each component does exactly one job and hands its output to the next.
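The assembly-line idea can be illustrated in a single process. In this minimal sketch, in-memory `queue.Queue` objects stand in for message topics (on Google Cloud this role would typically be played by a service such as Pub/Sub), and threads stand in for independently deployed services. The stage names, `fake_scrape`, and `parse` are hypothetical placeholders, not the project's real components.

```python
import queue
import threading
from typing import Any, Callable, List, Optional

STOP = object()  # sentinel: tells a stage to shut down and pass the signal downstream


def stage(inbox: queue.Queue, outbox: Optional[queue.Queue], work: Callable[[Any], Any]) -> None:
    """One 'service': consume from inbox, do a single job, publish the result to outbox."""
    while True:
        msg = inbox.get()
        if msg is STOP:
            if outbox is not None:
                outbox.put(STOP)
            return
        result = work(msg)
        if outbox is not None:
            outbox.put(result)


def fake_scrape(url: str) -> dict:
    # Hypothetical stand-in for a browser-based scraper service.
    return {"url": url, "html": f"<h1>{url}</h1>"}


def parse(page: dict) -> dict:
    # Stand-in parser: strips the <h1> wrapper produced by fake_scrape.
    return {"url": page["url"], "title": page["html"][len("<h1>"):-len("</h1>")]}


def run_pipeline(urls: List[str]) -> List[dict]:
    to_scrape, to_parse, to_store = queue.Queue(), queue.Queue(), queue.Queue()
    stored: List[dict] = []
    workers = [
        threading.Thread(name="scraper", target=stage, args=(to_scrape, to_parse, fake_scrape)),
        threading.Thread(name="parser", target=stage, args=(to_parse, to_store, parse)),
        threading.Thread(name="storer", target=stage, args=(to_store, None, stored.append)),
    ]
    for w in workers:
        w.start()
    for url in urls:
        to_scrape.put(url)  # the "event" that kicks off work for one URL
    to_scrape.put(STOP)
    for w in workers:
        w.join()
    return stored
```

Because each stage only knows its inbox and outbox, any one of them can be scaled out, replaced, or redeployed without touching the others, which is the property the microservices split is meant to buy.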

Scraping a modern, JavaScript-heavy website requires more than just fetching HTML. You need to look like a human.
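Rendering JavaScript requires driving a real or headless browser (tools such as Playwright or Selenium do this), which the sketch below does not attempt. It covers only the "look like a human" half: varying request headers and pacing page loads irregularly. `HumanLikeSession` and `build_request` are hypothetical helpers, the User-Agent strings are placeholders to be replaced with real, current browser values, and the 2 to 6 second delay range is an assumed default.

```python
import random
import urllib.request
from typing import Dict, Optional

# Placeholder values: substitute real, current browser User-Agent strings.
USER_AGENTS = [
    "Mozilla/5.0 (placeholder-desktop-browser-1)",
    "Mozilla/5.0 (placeholder-desktop-browser-2)",
    "Mozilla/5.0 (placeholder-mobile-browser-3)",
]


class HumanLikeSession:
    """Hypothetical helper: varies request headers and pacing between page loads."""

    def __init__(self, min_delay: float = 2.0, max_delay: float = 6.0,
                 rng: Optional[random.Random] = None):
        self.min_delay = min_delay
        self.max_delay = max_delay
        self.rng = rng if rng is not None else random.Random()

    def next_headers(self) -> Dict[str, str]:
        # Pick a User-Agent per request and send headers a normal browser would send.
        return {
            "User-Agent": self.rng.choice(USER_AGENTS),
            "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
            "Accept-Language": "en-US,en;q=0.9",
        }

    def next_delay(self) -> float:
        # Irregular think-time between page loads instead of a fixed, bot-like interval.
        return self.rng.uniform(self.min_delay, self.max_delay)


def build_request(url: str, session: HumanLikeSession) -> urllib.request.Request:
    # Builds the request only; no network call happens until it is passed to urlopen.
    return urllib.request.Request(url, headers=session.next_headers())
```

A crawler loop would call `time.sleep(session.next_delay())` between pages. Randomized pacing also lowers the load placed on the target site, which matters as much as avoiding detection.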

This entire system is built for production. That means no manual deployments and no guessing games.

This project provides a practical blueprint for building a serious, scalable web scraping platform. It moves beyond simple scripts and demonstrates how to apply modern cloud-native principles (microservices, event-driven architecture, and infrastructure as code, or IaC) to a complex, real-world data extraction problem.

It’s the difference between a tool that works today and a platform that works every day.